What is DSC?

DSC is an application for collecting and analyzing statistics from busy DNS servers. The application may be run directly on a DNS node or may be run on a standalone system that is configured to "capture" bi-directional traffic for a DNS node. DSC captures statistics such as: query types, response codes, most-queried TLDs, popular names, IPv6 root abusers, query name lengths, reply lengths, and much more. These statistics can aid operators in tracking or analyzing a wide range of problems including: excessive queriers, misconfigured systems, DNS software bugs, traffic count (packets/bytes), and possibly routing problems.

What does it look like? What does it produce?

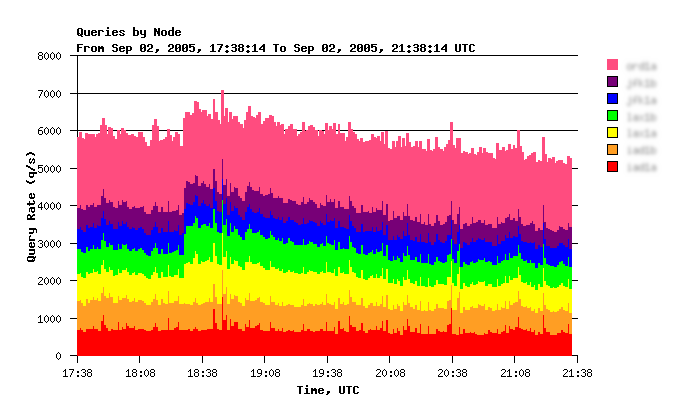

DSC is highly visual. To get a sense of this, have a look at the Tools catalog page describing DSC in greater detail. Here's a teaser:

How is data collected and managed?

DSC uses distributed system for collecting, visualizing, and archiving DNS measurements. The collector may run on the DNS system, or on a separate system connected to a span/mirror port on a switch. The collector captures messages sent to/from a DNS server (using libpcap) and stores the datasets to disk every 60 seconds, as XML files. A cron job copies the XML datasets to the presenter (which may run on the same system as the collector, or may run on a separate system. (You may run the collector only, and send the datasets to the OARC presenter system for processing and graphical display of data)

The presenter receives XML datasets from a collector(s). Since XML parsing is rather slow on older hardware, another process—the extractor—converts the XML datasets to a line-based text file. The presenter uses a cgi script to display data in a web browser, which lets you choose different time scales, nodes in a cluster, and different dataset keys.

DSC can store data indefinitely, providing you with long-term, historical statistics related to your DNS traffic. In addition to DSC's operational utility, you will also find the historical data very useful for research, whether you share the data with OARC, researchers, or your own internal engineering or security groups.

How is data secured between collector and presenter?

DSC currently supports three secure data transmission schemes:

- Over HTTP+SSL with X.509 certificates

- Over SSH to custom login shell application

- Over SSH with rsync

What are the hardware requirements?

In general the hardware requirements depends on the load seen by the DNS servers you monitor. The collector process is (currently) relatively lightweight. It can be a "medium-sized" box. The presenter system does more work and should be a "large-sized" system.

What are the operating system requirements?

DSC runs best on FreeBSD and Linux. It also runs well on Solaris.

How often is the data updated?

The collector writes XML files every 60 seconds. A cron job on the collector sends them to the presenter a few seconds later.

The presenter usually processes new data with a cron job that runs every five minutes. Thus, the data display on the presenter is usually delayed no more than six or seven minutes.

How much disk space does it require?

Collector: Under normal operation the collector requires very little disk space. However, if the collector is unable to talk to the presenter, the XML files are buffered for future transmission. The amount of disk space on the collector determines how much data can be buffered in the event of a network outage. In most situations, 1GB of local disk space should be plenty.

Presenter: The presenter stores data permanently. For a typical, busy DNS server, you should plan on consuming about 1GB of space every four months.

How well does this software scale for larger installations?

A number of DNS-OARC members use this software for their own internal purposes. At times, there have been scaling issues encountered with the amounts of data collected and in particular having one presenter process it all. At times there is so much data that the presenter/grapher system falls behind, make it appear that it isn't working when upon closer inspection it is days or weeks behind. It has been found that a good practice is to organize your installation using the following guidelines:

- Instead of having the processing done at the presenter which can overload it with too much unprocessed data, have the DNS servers themselves do the processing of data into XMLs

- Since the DNS servers must upload raw data anyways, upload the processed output (the XML files) instead to the presenter

- Use the central presenter (the system that does the graphing) only as a presentation system containing all the processed XMLs. Do no processing on that system.

The method works very well for some members that run root servers around the world. Another and more advanced method is to compile the dsc-xml-extractor perl script using perl's binary compiler. The resulting binary was found to process the collector data at least a magnitude faster than the perl script version.

How can I contribute DSC data?

OARC is always happy to receive DSC data from members and non-members alike. We prefer to use either raw ssh or rsync upload methods, both of which require an SSH public key from the contributor.

Where do I get the DSC software?

DSC is developed and maintained by DNS-OARC. Get tarballs/packages, installations instructions or changelogs from our download page:

All development for the DSC software are done on Codeberg:

Is there a discussion venue for DSC users? Developers?

DNS-OARC runs one mailing list for DSC announcements, users and developers. Open discussion is encouraged and there is no such thing as a dumb question. Support for the software is conducted through those mailing lists.

How to use SSH as a DSC transport

A DSC collector stores files in /usr/local/dsc/run/node/upload/dest. For example, on c-root's ORD1A node there's a /usr/local/dsc/run/c-ord1a/upload/oarc/ directory. The files in this directory have to get back to OARC somehow, and the normal ways to do that are to use rsync or curl. Since rsync is a file-based tool, the sender has to know where on the destination server the files will go, which requires unfortunate synchronization between the server and client sides. Since curl uses X.509 certificates and talks to a web server, there is an unpleasant lot of certificate management to be done and the files being transmitted have to be aggregated into tar-balls. As an alternative to this, the ssh protocol can be used. OARC runs a customized sshd on the upload.dsc.dns-oarc.net virtual server, and this is the way PCAP files are uploaded. To use ssh for uploading DSC data, three things must be done. First, you need upload-ssh.sh, which should be attached to this web page. Put this in /usr/local/dsc/libexec/ on each client that will use ssh to upload DSC data. Second, you'll need an ssh identity file. This can be the same one you use to upload PCAP files or it can be a different one. Put this in /usr/local/dsc/certs/dest/node.id. For example, /usr/local/dsc/certs/oarc2/c-ord1a.id. Third, have the cron job that currently calls upload-x509.sh (or perhaps upload-rsync.sh call upload-ssh.sh instead. For example, sh libexec/upload-ssh.sh c-ord1a oarc2 oarc-cogent@upload.dsc.dns-oarc.net. As always, if you have any questions, contact OARC.

How To Upload PCAP Files To OARC

Members can upload PCAP files to OARC for analysis by stylized use of the SSH2 protocol. Files must be in tcpdump format, compressed using gzip. Storage will be organized by submitting member, by member's site or server identifier, and by the timestamp of the first PCAP record in the stream. Submitting members will use an existing trusted path (such as PGP secured e-mail) to submit an SSH2 key (RSA or DSA) in OpenSSH "authorized_keys" format to the OARC secretariat. It is recommended that this key not be used for any other purpose, since it is likely that the key will not have a pass phrase. More than one SSH2 public key can be submitted by a member, and by default, all will be active. The server name is upload.dsc.dns-oarc.net, and the login name will be assigned on a per-member basis by the OARC secretariat. Since authentication is by public/private key, there will be no password. Note that the SSH2 server does not support compression, since the PCAP stream will have been compressed with gzip before transmission. The command used will be pcap, with one mandatory argument, that being the identifier of the site or server where the PCAP stream originated. For example, this command will send one PCAP file to the OARC collector:

ssh -i oarc-member.id oarc-member@upload.dsc.dns-oarc.net pcap servername < file.tcpd.gz

If the exit code from ssh is zero (indicating success), then the output will be the word MD5 followed by a 32-digit message digest and a newline character. Any other exit code indicates an error, in which case the output will be text explaining the error condition. The following shell script can be used to upload all tcpdump files from a directory, removing each one as it is verified to have been successfully transferred:

#!/bin/sh

ssh_id=./oarc-member.id

ssh_bin=/usr/bin/ssh

remote=oarc-member@upload.dsc.dns-oarc.net

site=`hostname | sed -e 's/\..*//'`

for file in oarc.tcpd.*.gz

do

ls -l $file

output=$($ssh_bin -i $ssh_id $remote pcap $site < $file)

if [ $? -eq 0 ]; then

if [ "$output" = "MD5 `md5 < $file`" ]; then

echo OK

rm $file

fi

fi

done

exit

Note that since the server-side file name will be automatically chosen based on the timestamp of the first record in the PCAP stream, it is not necessary to upload these files in chronological or any other order.